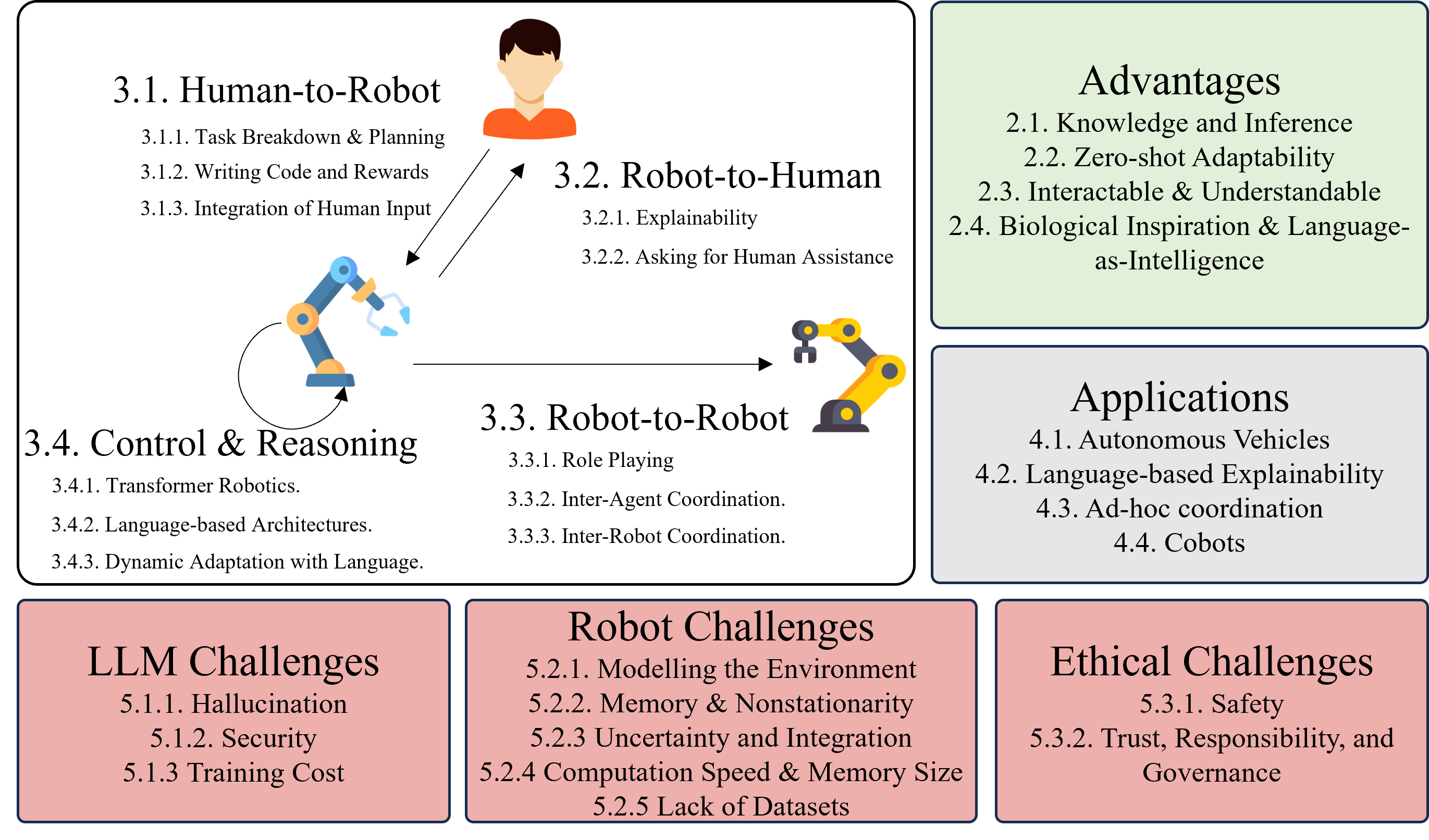

Embodied robots that can interact with their environment and neighbours are increasingly being used as a test case for developing Artificial Intelligence. This creates a need for multimodal robot controllers that can operate across different types of information, including text. Large Language Models can process and generate textual as well as audiovisual data and, more recently, robot actions. Language models are increasingly being applied to robotic systems; these language-based robots leverage the power of language models in a variety of ways. Additionally, the use of language opens up multiple forms of information exchange between members of a human-robot team. This survey motivates the use of language models in robotics and then categorises works according to the part of the overall control flow in which language is incorporated. Language can be used by a human to task a robot, by a robot to inform a human, between robots as a human-like communication medium, and internally for a robot's planning and control. Applications of language-based robots are explored, and numerous limitations and challenges are discussed to summarise the development needed for the future of language-based robotics.

Graphical Abstract: Language use in robotics can be broken into four categories: Human-to-Robot (a human instructing the robot with language), Robot-to-Human (a robot explaining or validating its actions to the human), Robot-to-Robot (robots communicating with each other), and Internal (a robot using language internally). We also discuss the advantages, some applications, and the limitations of LLMs in general, in robotics specifically, and with respect to ethics.